Hello Friends,

Welcome Back !!

In today’s article, we focus on one more important family of mathematical functions heavily used in the analytics space: “COMPLEX FUNCTIONS”. Here “complex” means complicated and non-linear, not functions of complex numbers. Like our previous articles, this one is heavily focused on mathematics. We highly recommend going through the links below to get comfortable with the background.

- https://ainxt.co.in/fun-with-functions-to-understand-normal-distribution/

- https://ainxt.co.in/deep-diving-into-normal-distribution-function-formula/

- https://ainxt.co.in/importance-of-taylor-series-in-deep-learning-machine-learning-models/

- https://ainxt.co.in/hidden-facts-of-cross-entropy-loss-in-machine-learning-world/

Introduction

In short, complex functions are needed to model complex relations. What do we mean by complex relations?

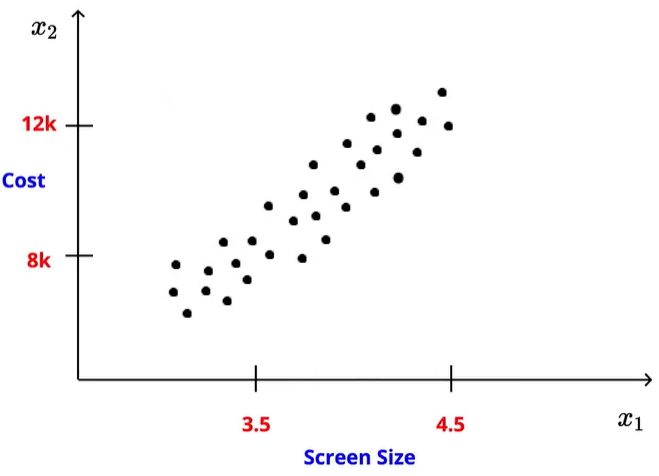

Let’s take the scenario below, which shows the relationship between the screen size and the cost of a mobile phone. We can easily fit a linear equation (Y = a + bX) that maps the relation between these two variables.

Simple linear models can capture that as the screen size of a phone increases, its cost also increases. But the real world is often not as simple as a linear function. The same screen size vs. cost details, collected from real-world data, look like this:
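As a minimal sketch of what fitting Y = a + bX means in practice, the snippet below estimates a and b by ordinary least squares. The screen sizes and prices are made-up illustrative values, and `fit_line` is a hypothetical helper name, not something taken from a library.

```python
# Hypothetical data: screen size in inches and phone cost (illustrative values only).
screen_size = [4.0, 4.7, 5.5, 6.1, 6.7]
cost = [150.0, 210.0, 290.0, 340.0, 410.0]

def fit_line(xs, ys):
    """Return (a, b) that minimise the squared error of Y = a + b * X."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); intercept follows from the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

a, b = fit_line(screen_size, cost)
print(f"cost ~ {a:.1f} + {b:.1f} * screen_size")
```

A positive slope b is the model's way of saying that a larger screen goes with a higher cost.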

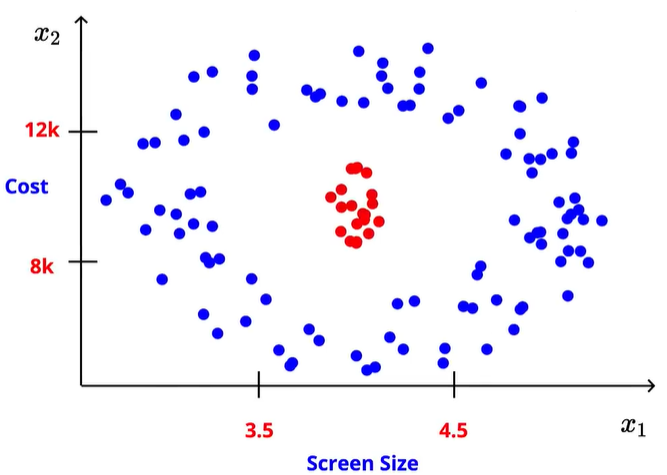

This data is clearly not linearly separable: no straight line can split the green points from the blue points. We want a function that cleanly separates the two groups. Hence we need complex functions to model complex relations.

Approaching Complex Functions

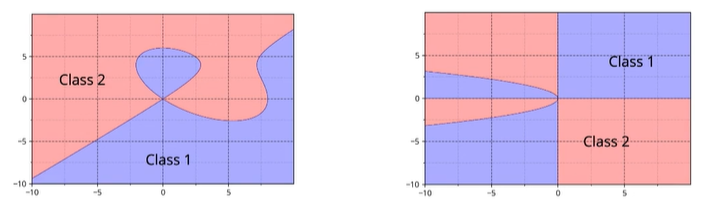

In practice, it is very hard to come up directly with a complex function that separates the different classes. We need a simpler approach!

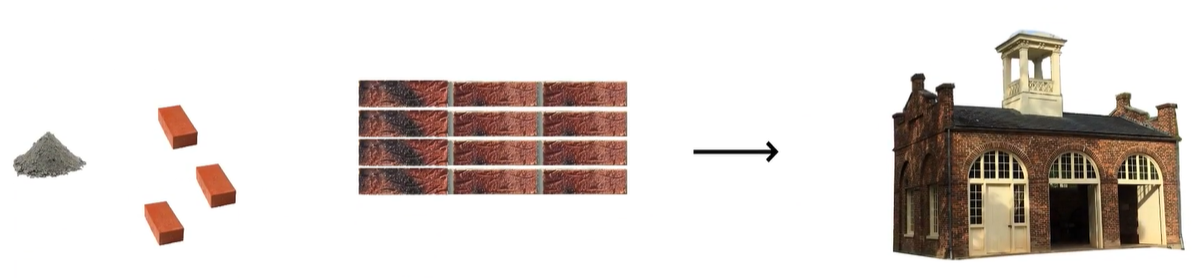

Let’s take the analogy of building a house. We don’t build the whole house at once. We start with the basic building block, the BRICK, and lay the foundation. On top of this we raise pillars, and we keep going until we reach the final structure shown below.

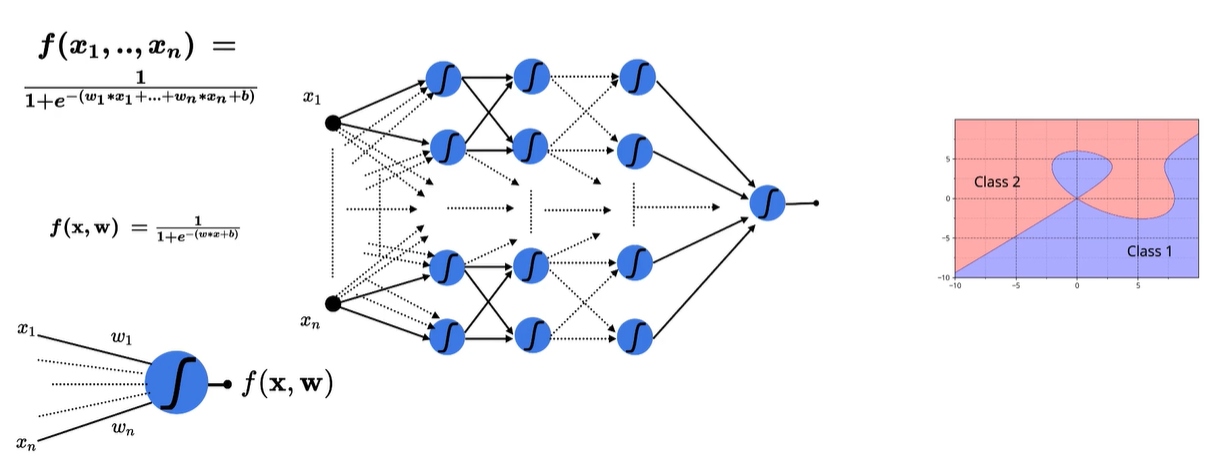

Similarly, in deep learning we use “sigmoid functions” as our basic building block and arrange them in different combinations, called a “Dense Neural Network” (DNN), to learn these complex functions.

The resulting DNN, with sigmoid neurons as its building blocks, is nothing but a learning model that approximates a complex function. But how do we know that a deep neural network built from blocks like sigmoid activation functions can actually learn such complex functions?
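To make the building block concrete, here is a small sketch of a single sigmoid neuron with one input. The names `sigmoid` and `sigmoid_neuron` and the chosen weights are our own illustrative assumptions. The loop shows how a larger weight w makes the curve steeper around x = 0.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + e^(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # z < 0, so this cannot overflow
    return e / (1.0 + e)

def sigmoid_neuron(x, w, b):
    """One sigmoid neuron with a single input x, weight w and bias b."""
    return sigmoid(w * x + b)

# Larger weights squeeze the transition from 0 to 1 into a narrower range of x.
for w in (1.0, 5.0, 50.0):
    outputs = [round(sigmoid_neuron(x, w, 0.0), 3) for x in (-1.0, -0.1, 0.0, 0.1, 1.0)]
    print(f"w = {w:>4}: {outputs}")
```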

Universal Approximation Theorem

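No matter how complex the relationship between input and output is, we can use a collection of neurons arranged as a dense neural network to approximate it. More precisely, for a continuous function on a bounded input range, a network with enough sigmoid neurons can get as close to that function as we like. This is known as the “UNIVERSAL APPROXIMATION THEOREM”.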

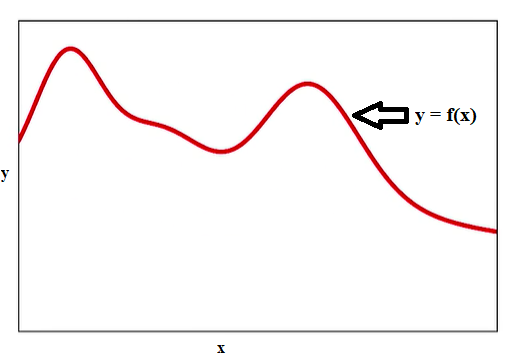

Let’s take an example of two-dimensional data where y = f(x), i.e. y is some function of x. We need to find the function that maps the relationship between x and y.

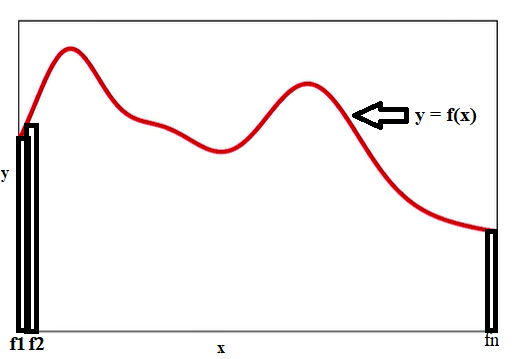

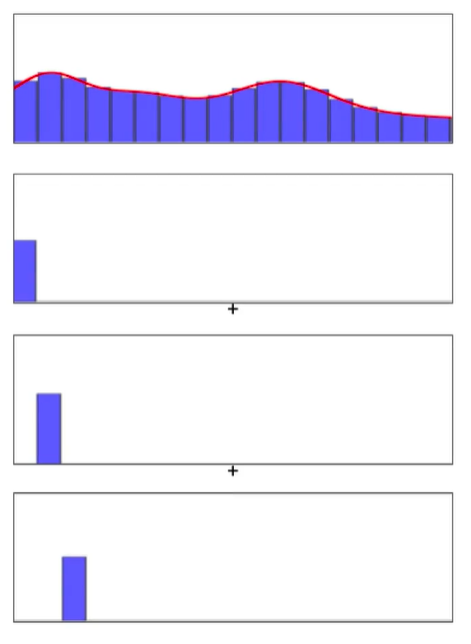

Instead of looking at this plot as a whole, we take only a very small portion of the data and map it to a function (f1) that outputs 1 for that portion of the plot and 0 everywhere else. We repeat this process with n different functions, each responsible for its own portion of the data. Scaling each function by the height of the curve in its portion and adding them all up gives a staircase approximation of f.

The key thing to note here is: “We don’t need to worry about coming up with one complex function. Instead, we can take simple functions and combine many of them to approximate the true function.”
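The idea of covering the plot with n small functions can be sketched as follows. Everything here is illustrative: `target` is a hypothetical stand-in for the unknown true function on the range 0 to 1, and each indicator is scaled by the value of `target` at the middle of its portion, which is our own simple choice.

```python
import math

def target(x):
    # Hypothetical "true" function; any continuous function on [0, 1] would do.
    return math.sin(2 * math.pi * x) + x

def make_indicator(lo, hi):
    """Return f_i(x) that is 1 on [lo, hi) and 0 everywhere else."""
    return lambda x: 1.0 if lo <= x < hi else 0.0

def build_approximation(n):
    """Split [0, 1) into n portions and sum n scaled indicator functions."""
    width = 1.0 / n
    pieces = []
    for i in range(n):
        lo, hi = i * width, (i + 1) * width
        height = target((lo + hi) / 2)  # curve height in the middle of the portion
        pieces.append((height, make_indicator(lo, hi)))
    return lambda x: sum(h * f(x) for h, f in pieces)

def max_error(approx, samples=1000):
    """Largest gap between target and approximation on a grid over [0, 1)."""
    return max(abs(target(i / samples) - approx(i / samples)) for i in range(samples))

for n in (10, 100):
    print(n, "pieces -> max error", round(max_error(build_approximation(n)), 4))
```

Using more, narrower pieces shrinks the worst-case gap, which is the intuition behind the theorem.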

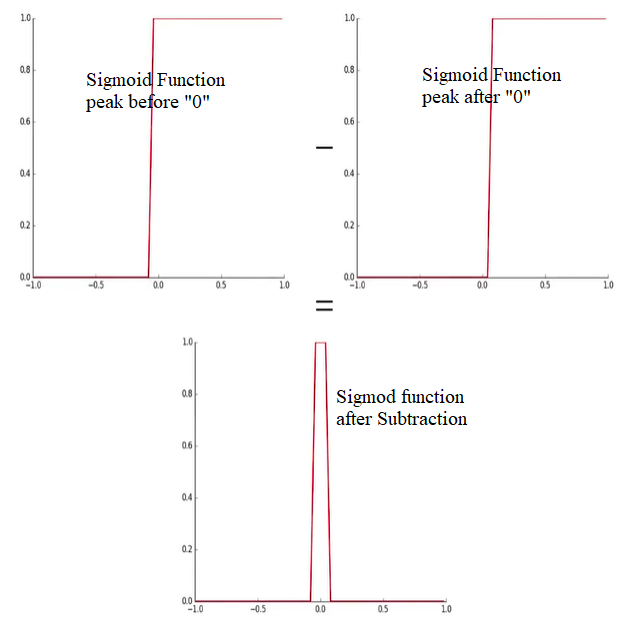

We can build such small step functions by adding and subtracting different sigmoid functions. For example, subtracting a steep sigmoid centred at the right edge of an interval from a steep sigmoid centred at the left edge leaves a bump that is close to 1 inside the interval and close to 0 outside it.

Thus, in a dense neural network, each layer is a combination of activation functions. The network learns weights such that these units form small functions, and combinations of many such small functions approximate the complex relation between input and output values.
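Here is a small sketch of one such step function made from two sigmoids. The function name `bump`, the interval edges and the steepness value are illustrative assumptions.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + e^(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def bump(x, left, right, steepness=200.0):
    """Difference of two steep sigmoids: about 1 between left and right, about 0 outside."""
    rises_at_left = sigmoid(steepness * (x - left))
    rises_at_right = sigmoid(steepness * (x - right))
    return rises_at_left - rises_at_right

for x in (0.2, 0.5, 0.9):
    print(x, round(bump(x, left=0.4, right=0.6), 4))
```

The first sigmoid switches the output on at the left edge and the second one switches it off again at the right edge.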
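As a rough sketch of how these pieces add up to a network, the code below wires 2n sigmoid neurons into one hidden layer and sums them with output weights. Note the assumptions: the weights are set by hand so that each pair of neurons forms one bump, whereas a real network would learn its weights through training, and `target` is a hypothetical function chosen only for illustration.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + e^(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def target(x):
    # Hypothetical "true" function on [0, 1]; illustrative only.
    return math.sin(2 * math.pi * x) + x

def build_network(n):
    """Hand-set (not learned) weights: n bumps, each made of two sigmoid neurons."""
    width = 1.0 / n
    k = 200.0 * n  # steepness; larger values give sharper bump edges
    hidden = []          # one (weight, bias) pair per hidden neuron
    output_weights = []  # one output weight per hidden neuron
    for i in range(n):
        lo, hi = i * width, (i + 1) * width
        height = target((lo + hi) / 2)
        hidden.append((k, -k * lo))      # switches on at the left edge
        output_weights.append(height)
        hidden.append((k, -k * hi))      # switches off at the right edge
        output_weights.append(-height)
    return hidden, output_weights

def forward(x, hidden, output_weights):
    """Forward pass: weighted sum of the sigmoid neuron outputs."""
    return sum(v * sigmoid(w * x + b) for (w, b), v in zip(hidden, output_weights))

hidden, output_weights = build_network(50)
worst = max(abs(target(i / 1000) - forward(i / 1000, hidden, output_weights))
            for i in range(1000))
print(len(hidden), "hidden neurons, max error", round(worst, 4))
```

Raising n adds more neurons and narrower bumps, so the network output follows the target more closely.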

Recap

- In real-world data, the relationship between input and output variables is often non-linear.

- We need models that can learn such complex relations, also known as complex functions.

- Instead of trying to come up with one big function that estimates the relationship, we use simple functions and their combinations to capture complex relations.

- The Universal Approximation Theorem is the guarantee that combinations of many simple functions can approximate complex functions.

We hope you have enjoyed reading this article. Please leave your questions and feedback in the comments. Until next time, keep reading!!

